What is a Neural Network?

Learn what a neural network is and how it works conceptually. No hard math, just logic.

Forget everything you think you know about machine learning for a moment. At its core, a neural network is just a clever way to approximate a function when you don't know the rules of that function. As an engineer, you're used to writing explicit rules: if x > 5, return "foo".

A neural network is what you build when you have a million examples of inputs and their desired outputs, but no earthly idea how to write the if/then statements to connect them.

The Simplest Building Block: The Neuron

Imagine a single, simple function. This function takes a few numbers as input, multiplies each by a specific "importance" value, adds them up, and then decides if the result is big enough to matter.

That's a neuron. In slightly more technical terms:

- Inputs: It receives one or more numbers (input data).

- Weights: Each input is multiplied by a "weight." Think of a weight as a knob that dials the importance of that specific input up or down. A high weight means this input is very influential; a low weight means it's less so.

- Sum & Bias: All the weighted inputs are added together. A "bias" is then added, which acts like a general offset, making it easier or harder for the neuron to activate.

- Activation: The final sum is passed through an "activation function." This is a simple check that decides the neuron's output. A common one, called ReLU (rectified linear unit), is: if the sum is greater than zero, output the sum; otherwise, output zero. This is what allows the neuron to "fire" or "stay quiet".

And that's it. A neuron is just a tiny decision-maker. On its own, it's not very smart. Its power comes from working in a team.

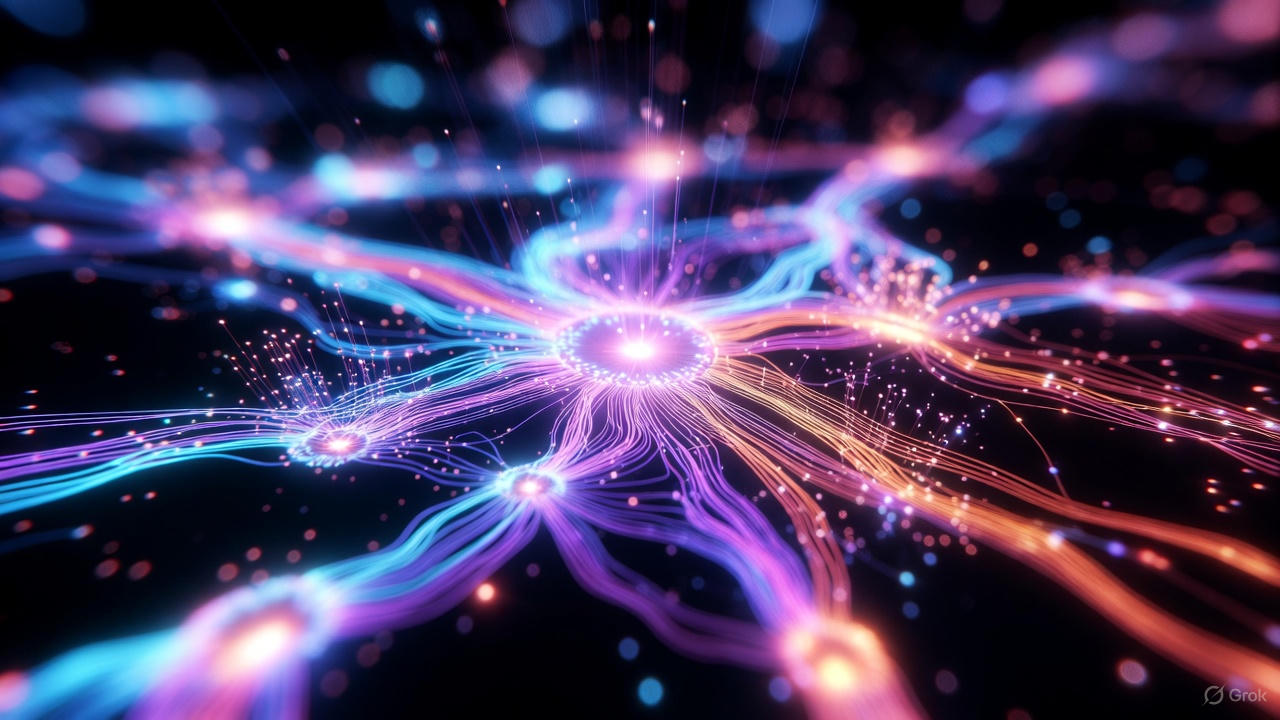
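To make the four steps concrete, here is a minimal Python sketch of a single neuron. The function names `relu` and `neuron` and all the numbers are illustrative choices, not taken from any library. The activation is the "output the sum if positive, otherwise zero" rule described above.

```python
def relu(z: float) -> float:
    """Activation: let positive sums through, clamp everything else to zero."""
    return z if z > 0 else 0.0


def neuron(inputs: list[float], weights: list[float], bias: float) -> float:
    """One neuron: multiply each input by its weight, add them up, add the bias, activate."""
    if len(inputs) != len(weights):
        raise ValueError("each input needs exactly one weight")
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return relu(total)


# Hypothetical example: three inputs, with the middle one dialed up to high importance.
print(neuron([1.0, 2.0, 3.0], weights=[0.1, 0.8, -0.2], bias=0.5))   # fires
print(neuron([1.0, 2.0, 3.0], weights=[0.1, 0.8, -0.2], bias=-2.0))  # stays quiet
```

With a bias of 0.5 the weighted sum is 0.1 + 1.6 - 0.6 + 0.5 = 1.6, which is positive, so the neuron passes it through (up to floating-point rounding). Lowering the bias to -2.0 makes the sum -0.9, so the neuron stays quiet and returns 0.0. That is the bias acting as an offset that makes firing harder.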

Stacking Neurons into a Network: The Layers

A neural network is simply a collection of these neurons organized into layers.

- Input Layer: This is the network's front door. It takes in your raw data. For example, if you're trying to determine if an image is of a cat or a dog, each neuron in the input layer might correspond to the brightness of a single pixel.

- Hidden Layers: These are the layers of neurons between the input and the output. This is where the magic happens. The output of one layer of neurons becomes the input for the next. Each layer learns to recognize increasingly complex patterns. The first hidden layer might learn to detect simple edges from the pixels. The next layer might combine those edges to recognize shapes like ears or whiskers. The layer after that might combine those shapes to recognize a cat's face.

- Output Layer: This is the final layer that produces the result. For our cat/dog example, it might have two neurons: one for "cat" and one for "dog." The one that fires with a higher value is the network's prediction.

Information flows from the input layer, through the hidden layers, to the output layer. This is why it's often called a "feed-forward" network.

How a Network "Learns": The Art of Adjusting the Knobs

So we have a network of neurons, each with its own "weight" (importance) and "bias" (offset) knobs. When we start, all these knobs are set to random values. The network is an ignorant amateur. It will make terrible predictions.

The process of "training" is how we turn this amateur into an expert. It's a simple, repeated loop:

- Make a Guess (Prediction): We feed the network an input (e.g., an image of a cat) and let it make a prediction. With its random knob settings, it might say "dog" with 80% confidence.

- Measure the Error (Loss): We compare the network's prediction to the correct answer (we know it's a cat). The difference between the guess and the truth is the "error" or "loss." In this case, the error is high.

- Adjust the Knobs (Optimization): This is the clever part. We use a mathematical process to figure out which knobs (weights and biases) were most responsible for the error, and in which direction each one should turn. Most commonly, an algorithm called "backpropagation" works out how much each knob contributed to the error, and an "optimizer" uses that information to nudge every knob a small step in the direction that reduces it. Did a specific neuron in a hidden layer fire too strongly? Let's turn its weight down a bit. Was another one too quiet? Let's nudge its bias up.

- Repeat. A Lot. We repeat this guess-measure-adjust cycle with thousands or millions of examples from our dataset. Each time, we make tiny, incremental adjustments to the knobs. Over time, these adjustments collectively teach the network to recognize the intricate patterns that differentiate cats from dogs.
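The guess-measure-adjust-repeat loop fits in a few lines when the "network" is a single neuron. This is a minimal sketch under several assumptions. The neuron has no activation (it computes `weight * x + bias`). The loss is squared error, whereas the cat/dog example would normally use a classification loss such as cross-entropy. The optimizer is plain gradient descent. The gradients are written out by hand; that per-knob calculation is what backpropagation automates for networks with many layers. `train` and its parameters are hypothetical names.

```python
import random


def train(examples, epochs=200, learning_rate=0.05, seed=0):
    """Fit one neuron (weight * x + bias, no activation) with plain gradient descent."""
    rng = random.Random(seed)
    # Start with random knob settings: the "ignorant amateur".
    weight = rng.uniform(-1.0, 1.0)
    bias = rng.uniform(-1.0, 1.0)
    for _ in range(epochs):                     # 4. repeat, a lot
        for x, target in examples:
            guess = weight * x + bias           # 1. make a guess
            error = guess - target              # 2. measure the error;
            # the loss for this example is error ** 2 (squared error).
            # 3. adjust the knobs: each gradient says how the loss changes
            #    when that knob moves, so step the opposite way.
            grad_weight = 2 * error * x
            grad_bias = 2 * error
            weight -= learning_rate * grad_weight
            bias -= learning_rate * grad_bias
    return weight, bias


# Hypothetical data generated by the hidden rule y = 2x + 1; train() never sees the rule itself.
examples = [(x, 2 * x + 1) for x in [-2, -1, 0, 1, 2]]
weight, bias = train(examples)
print(f"learned weight = {weight:.3f}, bias = {bias:.3f}")
```

After enough small adjustments, the knobs settle very close to weight 2 and bias 1. The loop recovered the rule purely from examples, which is the "no earthly idea how to write the if/then statements" situation from the introduction in miniature.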

When we say a model has "learned," we simply mean that its vast collection of weights and biases has been adjusted to values that are very good at transforming inputs into correct outputs for a specific task. These weights and biases are the "parameters" of the model.
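Counting parameters is simple arithmetic. In a fully connected layer, each neuron has one weight per neuron in the previous layer, plus one bias. The helper below is a hypothetical sketch that applies that rule to a list of layer sizes (input layer first). The 784-pixel example assumes a 28×28 grayscale image and is illustrative only.

```python
def count_parameters(layer_sizes: list[int]) -> int:
    """Count weights and biases in a fully connected feed-forward network.

    layer_sizes gives the number of neurons per layer, input layer first.
    """
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        weights = n_in * n_out  # every neuron connects to every neuron in the previous layer
        biases = n_out          # one bias per neuron
        total += weights + biases
    return total


print(count_parameters([4, 3, 2]))      # a toy network
print(count_parameters([784, 128, 2]))  # 28x28 pixels -> 128 hidden -> cat/dog
```

The toy network has 4·3 + 3 + 3·2 + 2 = 23 parameters. The small image classifier already has 100,738. Parameter counts grow quickly with layer width, which is how large models reach billions of parameters.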

From Simple Networks to LLMs

An LLM (Large Language Model) is this exact concept, scaled up to an almost unimaginable degree and built on a special architecture called the Transformer.

Here are the key upgrades:

- From Pixels to Words (Embeddings): LLMs don't take in pixels; they take in text (for simplicity, we'll stick to models that take text in and produce text out). But how does a network do math on a word like "king"? It uses a technique called embedding. Imagine a giant dictionary where every word is converted into a list of numbers (a vector). These numbers aren't random; they represent the word's position in "meaning space." For example, the vectors for "king" and "queen" would be mathematically close to each other. This is how the model captures relationships between words.

- Understanding Context (The Attention Mechanism): When you read the sentence "The robot picked up the heavy metal object because **it** was magnetic," you know that "it" refers to the "object," not the "robot." Early neural networks struggled with this. The Transformer architecture's key ingredient is the attention mechanism. This allows the model, when processing one word, to "pay attention" to all the other words in the input and decide which ones are most relevant for understanding the current word's context. It's a powerful way of tracking relationships across long stretches of text.
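Both ideas can be sketched with a handful of numbers. In the block below, the 3-number vectors in `EMBEDDINGS` are invented for illustration; real models learn much longer vectors from data. Closeness in "meaning space" is measured with cosine similarity. The `attention` function is a simplified version of scaled dot-product attention. A real Transformer first multiplies each vector by learned query, key, and value matrices; this sketch skips that step and uses the embeddings directly. All names here are hypothetical.

```python
import math

# Hypothetical 3-number "meaning space" (real embeddings are learned, not hand-written).
EMBEDDINGS = {
    "king":  [0.9, 0.8, 0.1],
    "queen": [0.85, 0.75, 0.2],
    "apple": [0.1, 0.05, 0.95],
}


def cosine_similarity(a, b):
    """1.0 means the vectors point the same way; near 0 means unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    biggest = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - biggest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(query, keys, values):
    """Simplified scaled dot-product attention for a single query vector."""
    scale = math.sqrt(len(query))
    # How relevant is each word to the current one?
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    # Blend the value vectors, giving more say to the more relevant words.
    mixed = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(len(values[0]))]
    return weights, mixed


print(cosine_similarity(EMBEDDINGS["king"], EMBEDDINGS["queen"]))  # close to 1
print(cosine_similarity(EMBEDDINGS["king"], EMBEDDINGS["apple"]))  # much smaller

words = ["king", "queen", "apple"]
vectors = [EMBEDDINGS[w] for w in words]
weights, context = attention(EMBEDDINGS["king"], vectors, vectors)
print(dict(zip(words, weights)))
```

With these made-up vectors, "king" and "queen" have a cosine similarity of about 0.996, while "king" and "apple" score about 0.19. When processing "king", attention gives the most weight to "king" and "queen" and the least to "apple". The result, `context`, is a blend of the vectors that reflects what the word is surrounded by.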

Conclusion

At its heart, a neural network is a powerful pattern-matching machine. We've seen how a collection of simple, interconnected "decision-makers" (neurons) can learn, through a process of trial and error, to recognize complex patterns in data. By adjusting its internal "knobs" (the parameters), the network moves from a state of random guessing to one of expert intuition, capable of telling a picture of a cat from a picture of a dog.

We've also touched on how this fundamental concept is the bedrock for the massive Large Language Models that dominate AI today. They are built from the same core principles but are scaled up and enhanced with sophisticated techniques like embeddings and attention to handle the unique complexities of human language.

But so far, our network's job has been to look at a completed input and provide one final answer—a classification. This is an incredibly useful skill, but it is not the creative, generative power that has captured the world's imagination. How do we get a machine that is built to be a classifier to become a creator? How do we make the leap from a system that recognizes a cat to one that can write a poem about one?

Next Up: "From Classifier to Creator: The Generative Leap"

The answer is a surprisingly elegant shift in perspective. In our next article, we will explore the generative leap—the fundamental change in a neural network's task that transforms it from a pattern-recognizer into a pattern-producer. We will demystify how a model like an LLM can write a coherent paragraph by focusing on a profoundly simple, iterative task: predicting the very next word. Join us as we bridge the gap from a machine that sorts to a machine that speaks, and uncover the core mechanism that powers all modern generative AI.