So, What is RAG?

A beginner-friendly introduction to Retrieval-Augmented Generation (RAG) and why it matters in the world of AI.

Imagine This

You ask your friend a question: “Hey, who won the Best Picture Oscar in 2022?”

If your friend hasn’t kept up with the news, they might guess. That guess could be wrong.

Now imagine your friend quickly opens Google, reads the latest news, and then answers you. That’s much more reliable.

That’s exactly what RAG (Retrieval-Augmented Generation) does for AI.

Breaking Down the Buzzword

Retrieval-Augmented Generation sounds complicated, but let’s split it:

- Retrieval → finding the right information from a knowledge source (like documents, databases, or the web).

- Generation → using an AI language model (like the ones behind ChatGPT) to write a natural-language answer.

Put together: RAG = AI that doesn’t just “guess” from memory, but also looks things up first.
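To make “retrieval” a little more concrete, here is a minimal sketch of how a retriever might rank documents against a question. Real systems usually compare embedding vectors produced by a neural model. This toy version swaps in simple word-count vectors and cosine similarity instead, and the documents and function names are made up for illustration.

```python
import math
import re
from collections import Counter

def tokenize(text: str) -> list[str]:
    # Lowercase the text and keep only alphanumeric words (a deliberately crude tokenizer).
    return re.findall(r"[a-z0-9]+", text.lower())

def cosine_similarity(a: Counter, b: Counter) -> float:
    # Dot product over shared words, divided by the product of the two vector lengths.
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    # Score every document against the query and return the k best matches.
    q = Counter(tokenize(query))
    ranked = sorted(
        documents,
        key=lambda d: cosine_similarity(q, Counter(tokenize(d))),
        reverse=True,
    )
    return ranked[:k]

# Made-up example documents.
docs = [
    "Refunds are issued within 30 days of purchase.",
    "Our office is closed on public holidays.",
    "Vendor X contracts include a 14-day refund window.",
]

print(retrieve("What is the refund policy for Vendor X?", docs, k=1))
# → ['Vendor X contracts include a 14-day refund window.']
```

Here the Vendor X document wins because it shares the most words with the question. An embedding model would also catch matches that use different wording (“refund” vs. “refunds”), which this toy version misses.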

Why Do We Need RAG?

Large Language Models (LLMs), like the ones behind ChatGPT, are like students who stopped studying at a certain date (their training cutoff). They’re super smart, but they don’t know what happened yesterday, and sometimes they hallucinate (confidently make stuff up).

RAG helps fix this by:

- Providing up-to-date info → “What’s the stock price today?”

- Grounding answers in trusted sources → “According to your company’s HR policy…”

- Reducing hallucinations → Less guessing, more fact-based answers.

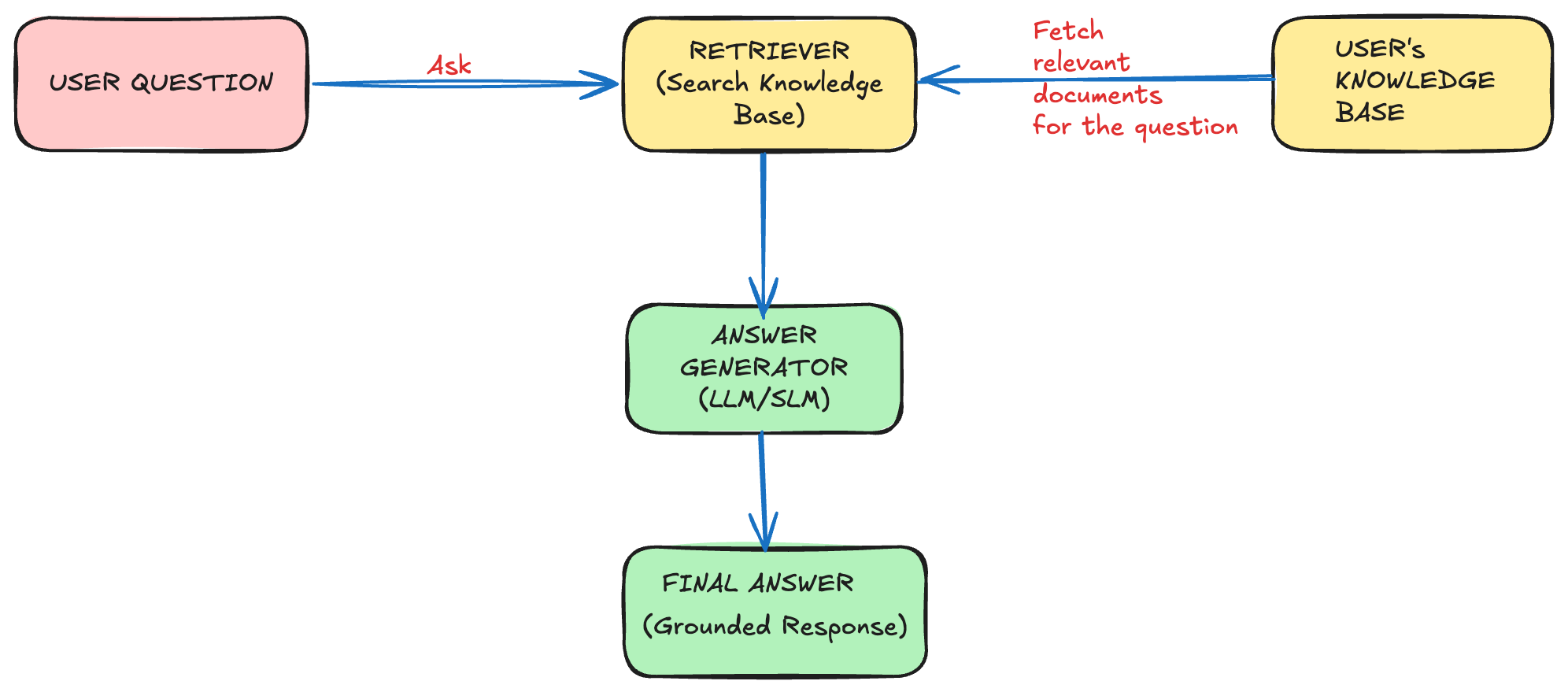

How Does RAG Actually Work? (Simplified)

Think of it as a three-step process:

1. You ask a question. Example: “Summarize the top 5 customer complaints from last month.”

2. The retriever kicks in. The system searches your data (emails, PDFs, documents) and pulls out the most relevant pieces.

3. The generator answers. The AI reads those pieces and generates a natural, conversational response.

So instead of saying “I don’t know” or making something up, the AI gives you an answer based on real documents.
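The three steps above can be sketched in a few lines of Python. Treat this as a toy under stated assumptions: the “database” is an in-memory list, retrieval is plain word overlap, and `call_llm` is a hypothetical placeholder for whatever model API you actually use (here it simply returns the prompt it would have sent, so you can see what the model receives).

```python
import re

def words(text: str) -> set[str]:
    # Crude tokenizer: the set of lowercase words in the text.
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(question: str, documents: list[str], k: int = 2) -> list[str]:
    # Step 2: rank documents by how many words they share with the question.
    q = words(question)
    return sorted(documents, key=lambda d: len(q & words(d)), reverse=True)[:k]

def build_prompt(question: str, passages: list[str]) -> str:
    # Put the retrieved passages in front of the question so the model answers from them.
    context = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return (
        "Answer using only the sources below. If they don't contain the answer, say so.\n\n"
        f"Sources:\n{context}\n\nQuestion: {question}"
    )

def call_llm(prompt: str) -> str:
    # Hypothetical placeholder: a real system would send the prompt to a language model here.
    return prompt

def rag_answer(question: str, documents: list[str]) -> str:
    passages = retrieve(question, documents)           # Step 2: retrieve
    return call_llm(build_prompt(question, passages))  # Step 3: generate

# Made-up example "database".
complaints = [
    "Ticket 101: customer complaint about late delivery.",
    "Ticket 102: customer complaint about a damaged package.",
    "Newsletter: our team picnic is next Friday.",
]

print(rag_answer("Summarize each customer complaint.", complaints))
```

The key idea is in `build_prompt`: the model doesn’t need to have memorized your tickets, because the relevant ones are pasted into its input along with an instruction to stick to them.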

Everyday Examples of RAG

- Customer Support: AI chatbots that can answer based on your company’s FAQ or ticket history.

- Healthcare: Doctors asking an AI to pull up a patient’s records and summarize their history.

- Workplace Search: Instead of hunting through 100 PDFs, ask AI: “What’s the refund policy in our contract with Vendor X?”

- Personal Use: Upload all your notes or e-books, then ask, “Remind me what Chapter 3 said about resilience.”

RAG vs. Just Training an AI (Fine-Tuning)

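- Fine-tuning = teaching the AI by training it further on new data (slow, expensive, and the knowledge stays frozen until you train again).

- RAG = feeding the AI relevant knowledge on the fly, at the moment you ask (faster, more flexible, and usually cheaper).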

With RAG, you don’t have to re-train the model every time your information changes.
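A small sketch of what “no re-training” means in practice: with RAG, teaching the system a new fact can be as simple as adding a document to the store the retriever searches. The `KnowledgeBase` class below is hypothetical and uses naive word-overlap search; the point is that nothing about the model itself changes.

```python
import re
from dataclasses import dataclass, field
from typing import Optional

def words(text: str) -> set[str]:
    # Crude tokenizer: the set of lowercase words in the text.
    return set(re.findall(r"[a-z0-9]+", text.lower()))

@dataclass
class KnowledgeBase:
    # Hypothetical in-memory document store; real systems typically use a search index or vector database.
    documents: list[str] = field(default_factory=list)

    def add(self, doc: str) -> None:
        # "Teaching" the system a new fact is just storing another document; no model is retrained.
        self.documents.append(doc)

    def search(self, query: str) -> Optional[str]:
        # Return the stored document sharing the most words with the query (None if the store is empty).
        q = words(query)
        return max(self.documents, key=lambda d: len(q & words(d)), default=None)

kb = KnowledgeBase(["The refund window is 30 days."])
print(kb.search("What are the support hours?"))  # → The refund window is 30 days.
kb.add("Support hours are now 9am to 5pm.")
print(kb.search("What are the support hours?"))  # → Support hours are now 9am to 5pm.
```

Before the update, the best the system can do is return an unrelated policy; one `add` call later, it finds the right answer.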

Why Everyone Is Talking About RAG

Because it’s the bridge between “cool AI demos” and real-world use.

- Enterprises can connect their data without retraining giant models.

- Developers can build smarter apps with less cost.

- Everyday users get answers that actually make sense for their context.

Quick Analogy to Remember

Think of an AI without RAG like a student taking a closed-book test, answering from memory only. Now think of an AI with RAG like a student taking an open-book test: allowed to open the textbook, skim the right page, and then answer.

Who would you trust more? Exactly.

Key Takeaways

- RAG = Retrieval + Generation.

- It helps AI give more accurate, up-to-date, and context-aware answers.

- Keeping its knowledge current is cheaper and faster than retraining a model.

- It’s already powering real apps in customer service, enterprise search, and more.

Next time you hear "RAG pipeline" or "RAG chatbot," just remember: It's simply AI looking things up before answering you.